One of the best things you can do to boost conversions on an ecommerce site is to offer a fast, relevant search feature. Give customers a good search and you'll see a lot more journeys that begin with a search and end with a purchase. Taking this to the extreme, I'd like to see what would happen if some popular online store replaced its homepage with just a prominent search field. That would probably win the prize for the most motherf***ing website ever, but I doubt any large brand will ever do that. Digressions aside, search is important, and it's one of the fastest-changing parts of the ecommerce ecosystem, thanks in part to AI and recent technology shifts.

Technically speaking, any search functionality comes in two parts: a search query and a list of results. A search query is the customer's input: a word or sentence that describes what they're looking for, along with some filtering or sorting preferences. Results are sorted by relevance to the search query and options. Relevance is a ranking score, typically computed by an algorithm such as TF-IDF or BM25, which weighs how often the query's keywords occur in a document against how common those keywords are across the whole index. Such a mechanism is called full-text search.
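
To make the ranking idea more concrete, here is a minimal Python sketch of BM25 scoring. It's a simplification under several assumptions of mine: plain whitespace tokenization, the commonly used defaults `k1=1.5` and `b=0.75`, one common variant of the IDF formula, and three made-up product titles. Real search engines add stemming, stop words, typo tolerance and much more.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Naive tokenizer: lowercase and split on whitespace (an assumption).
    return text.lower().split()


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Return one BM25 relevance score per document (higher is more relevant)."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(d) for d in tokenized) / n_docs
    # Document frequency: in how many documents each term appears.
    df = Counter(term for d in tokenized for term in set(d))

    scores = []
    for d in tokenized:
        tf = Counter(d)  # term frequency inside this document
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            # Rare terms get a higher weight than common ones.
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Length normalization: long documents are penalized slightly.
            norm = tf[term] + k1 * (1 - b + b * len(d) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


# Hypothetical product titles, for illustration only.
docs = [
    "red party dress for women",
    "blue denim jacket",
    "black leather party shoes",
]
scores = bm25_scores("party dress", docs)
ranked = sorted(zip(scores, docs), reverse=True)
for score, title in ranked:
    print(f"{score:.3f}  {title}")
```

The title that contains both keywords ranks first, the one that contains only "party" comes second, and the jacket scores zero because it shares no keyword with the query.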

For decades, full-text search has been the foundation of every search engine, and it works well in most cases. Its biggest flaw, though, is that it's too deterministic: it matches keywords and doesn't understand search intent. Since human language is so diverse and people are so different, it's difficult to predict which keywords will be used to search for a product or service. As a result, people often don't get what they're looking for and, in the most extreme case, they get nothing. It's the infamous zero results page that all marketers try to avoid at all costs.

Enter semantic search, which offers relevant results based on a customer's search intent instead of just keywords. Semantic search engines transform text into embeddings, which are arrays of values (vectors) that represent its meaning. Transformations are done using LLMs (Large Language Models) that process the index documents and store the resulting embeddings in a vector database. When a search query is entered, the same LLM converts it into a vector and a similarity search is run against all the stored embeddings. For more details on how it works, I wrote about it in my previous article here.
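
The similarity search step can be sketched in a few lines of Python. Everything here is an assumption for illustration: the three-dimensional vectors are hand-written rather than produced by a model (real embeddings have hundreds of dimensions), the product names are made up, and cosine similarity is just one common choice of similarity measure. A real vector database would also use an approximate index instead of comparing against every record.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    # Cosine of the angle between two vectors: close to 1.0 means "same direction".
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, hand-written for illustration only.
index = {
    "floral cocktail dress": [0.9, 0.8, 0.1],
    "steel-toe work boots": [0.1, 0.0, 0.9],
    "sequin evening gown": [0.8, 0.9, 0.2],
}


def semantic_search(query_vector: list[float], index: dict[str, list[float]], limit: int = 3):
    # Brute-force search: score every record, then keep the closest ones.
    scored = [(cosine_similarity(query_vector, vec), name) for name, vec in index.items()]
    return sorted(scored, reverse=True)[:limit]


# Pretend this vector is what the model returned for the customer's query.
query_vector = [0.85, 0.85, 0.1]
results = semantic_search(query_vector, index, limit=2)
for score, name in results:
    print(f"{score:.3f}  {name}")
```

Note that no keyword is compared anywhere: the two dresses win only because their vectors point in nearly the same direction as the query vector.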

Rather than matching keywords against the records in the index, semantic search tries to give the user the most relevant results by interpreting their search query. If the model is well trained, it will return relevant results more often, so the chances of getting zero results will be much lower.

Furthermore, the search engine might generate related content, such as a summary of the search results, using generative AI. By doing this, the user not only gets what they want, but is also inspired by contextual, personalized information that makes the whole experience even more immersive.

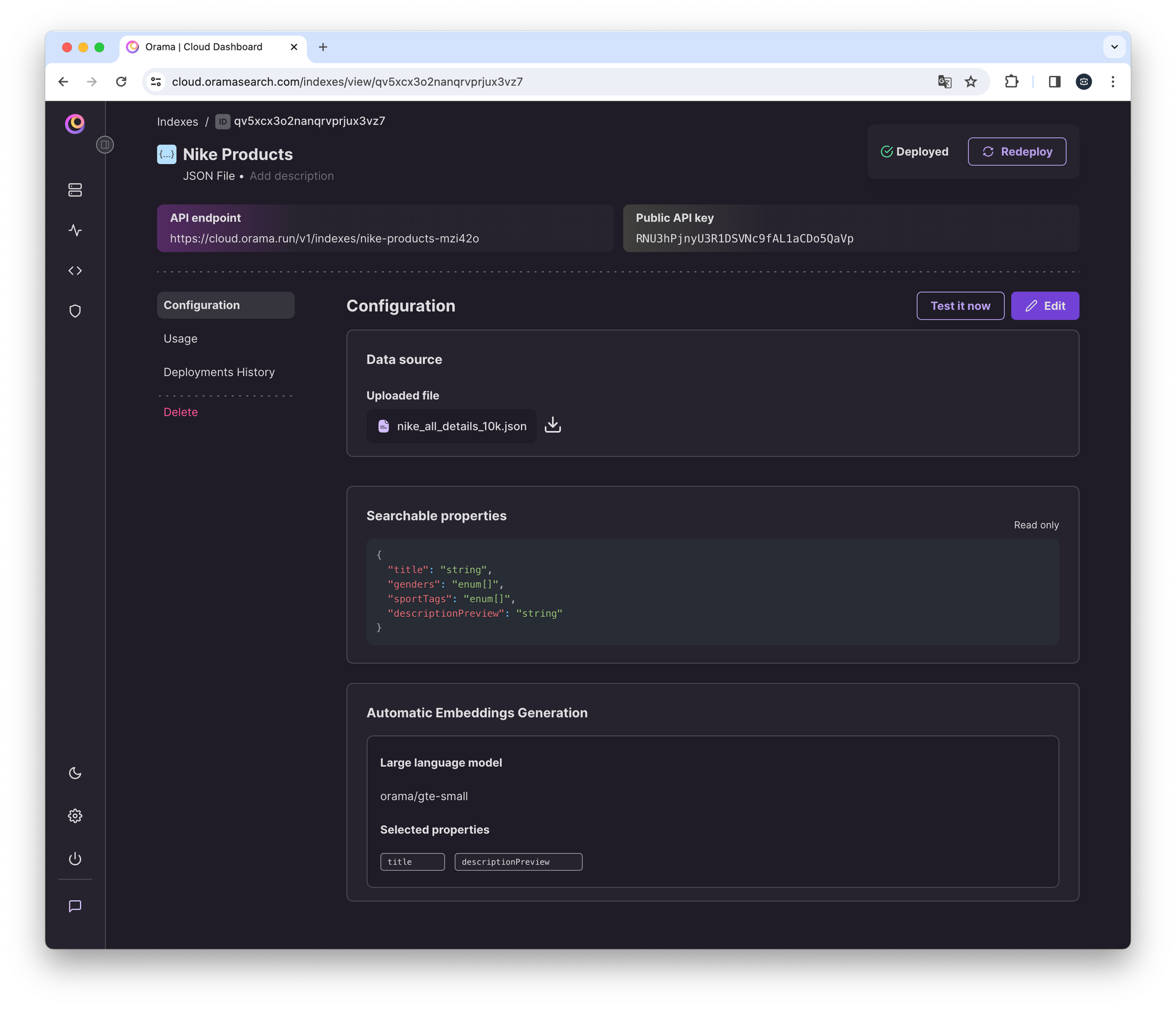

To see semantic search in action, I tried Orama, a very interesting project that democratizes search with a developer-first ethos. It only took me minutes to sign up for their cloud service, upload ten thousand products, and deploy my index to more than 300 edge nodes. For semantic search, I let the engine create embeddings automatically and store them in their vector database. As the LLM, I just picked one of the options.

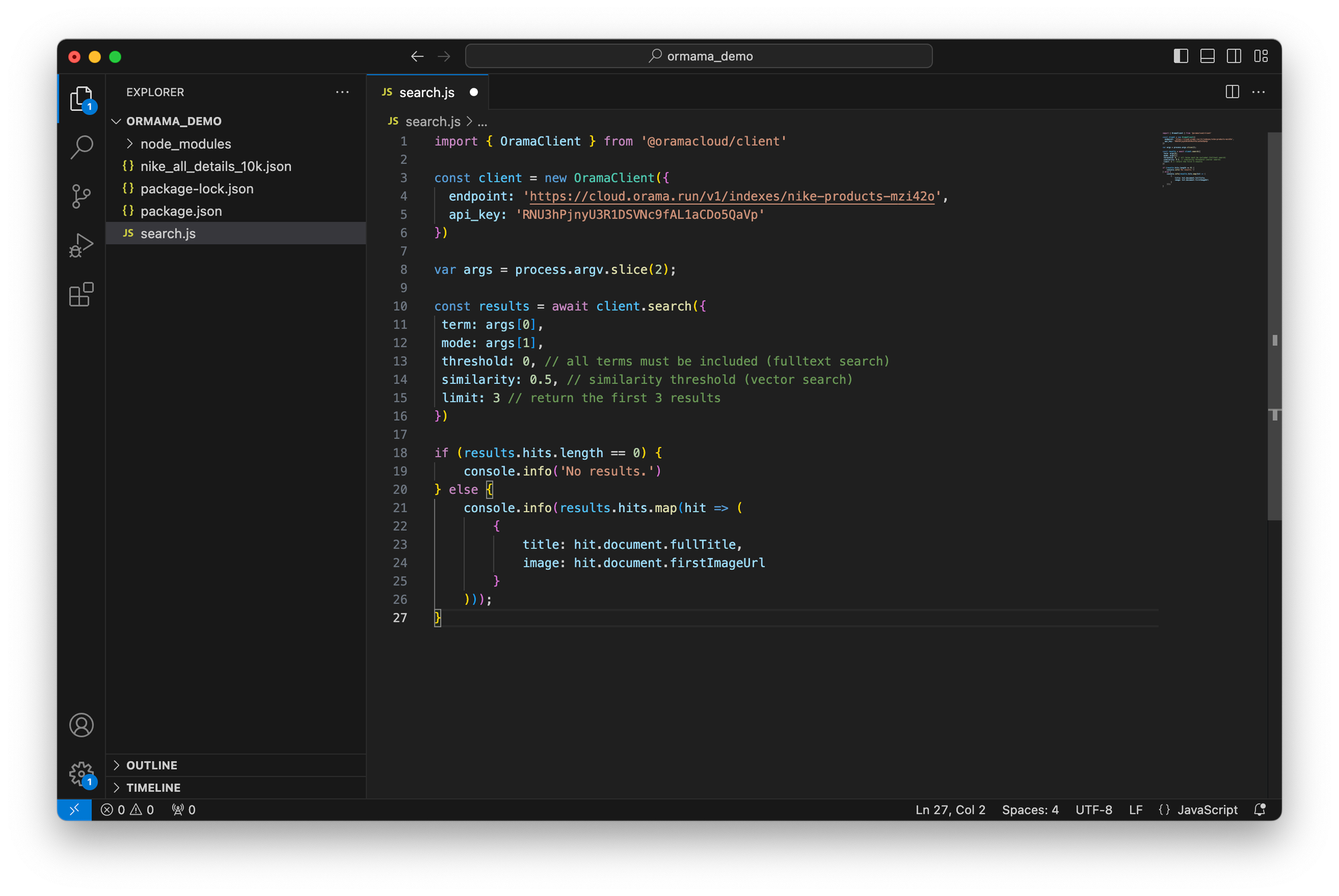

Then I got a public API key and an endpoint that I used in the snippet below:

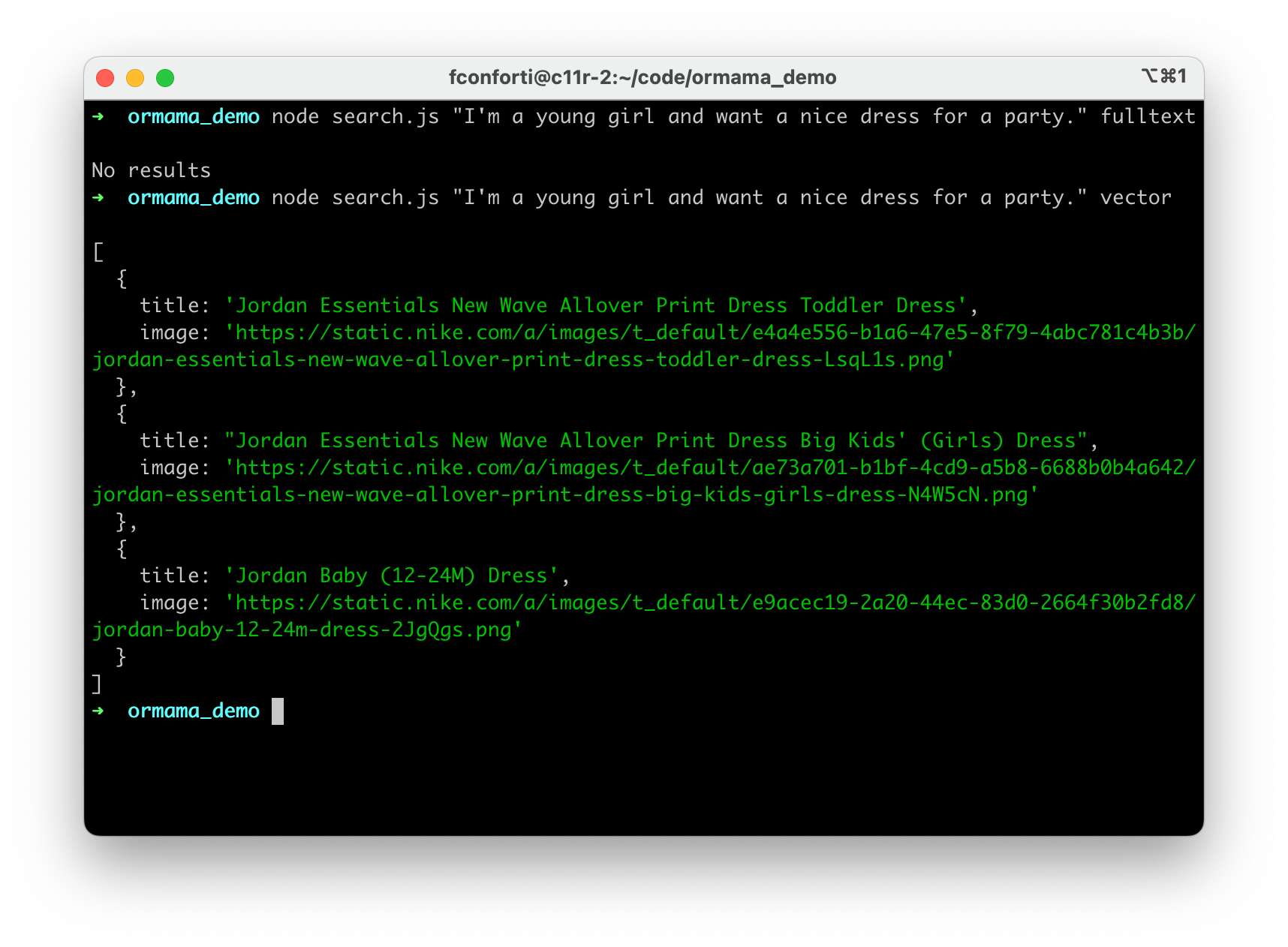

The snippet takes an argument to switch between full-text and semantic search. Only the top three products will be returned. I ran it on my local machine with the same search term and got the following results:

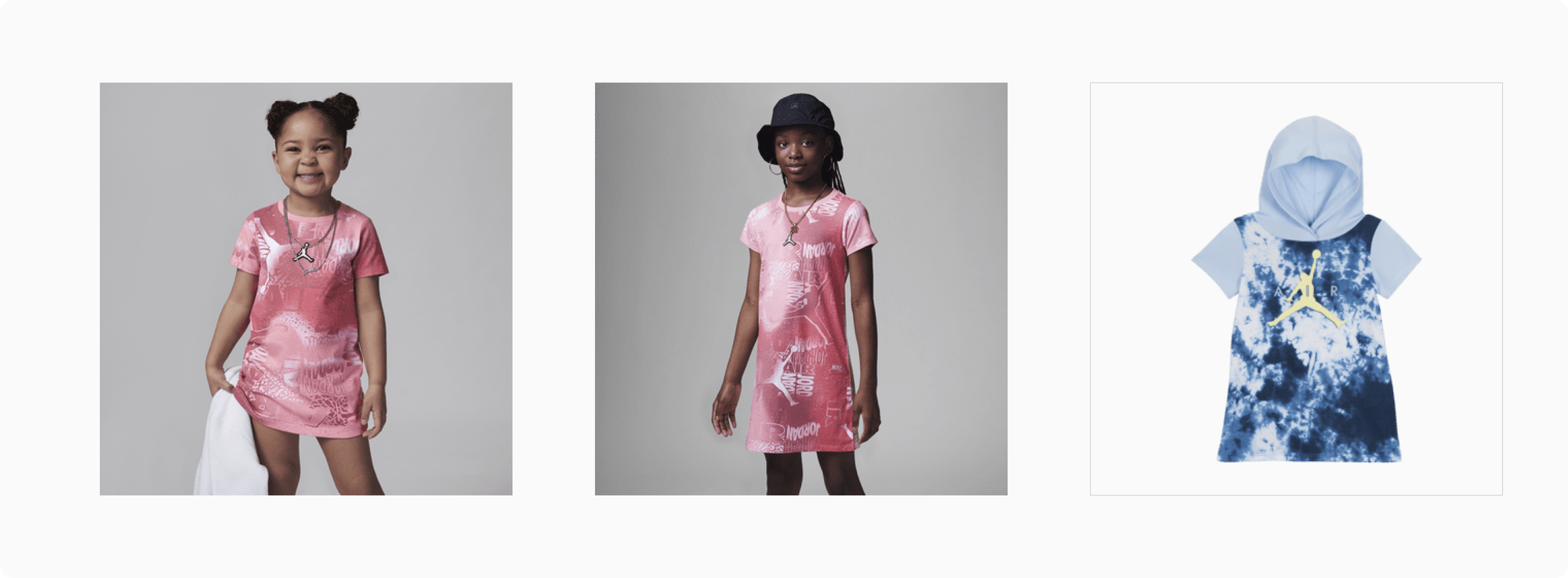

As you can see, full-text search returns no results, because no document in the index contains all the search terms of "I'm a young girl and want a nice dress for a party". By contrast, vector search nicely interprets my search intent and returns quite relevant results (pictures below).
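
The zero-results behavior is easy to reproduce with a toy Python sketch. This is not how Orama works internally; it's only a naive matcher, under my own assumptions, that requires every query term to appear in a document, run against made-up product titles.

```python
def match_all_terms(query: str, docs: list[str]) -> list[str]:
    # Keep only the documents that contain every single query term.
    terms = set(query.lower().split())
    return [d for d in docs if terms <= set(d.lower().split())]


# Hypothetical product titles, for illustration only.
docs = [
    "red party dress for women",
    "floral summer dress",
    "black leather party shoes",
]

short_hits = match_all_terms("party dress", docs)
long_hits = match_all_terms("I'm a young girl and want a nice dress for a party", docs)

print(short_hits)
print(long_hits)
```

The short keyword query finds the party dress, while the natural-language sentence finds nothing, because words like "young" or "nice" don't appear in any title.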

Obviously, I've just scratched the surface, but it's an interesting use case worth exploring further. My next step is to play around with different LLMs and search terms to see how it goes. If you want to try it yourself, you can create a free Orama Cloud account here or explore their open-source repo on GitHub.