Imagine that you want to build an AI chatbot that can retrieve data from external sources and take actions in external systems. Your chatbot could place an order based on a user's prompts, for example, or retrieve a placed order so it can inform the user of its fulfillment status.

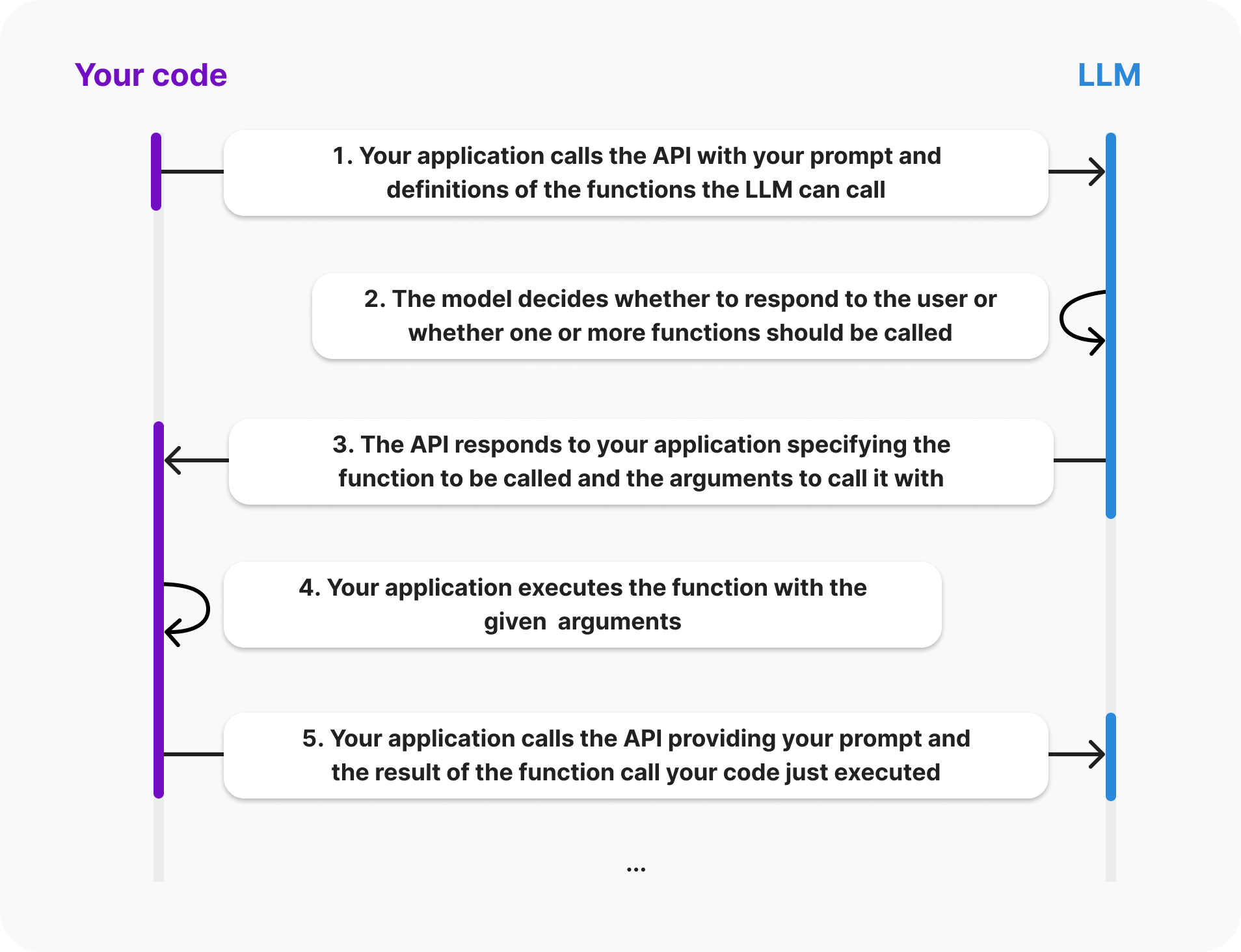

OpenAI's function calling feature lets you connect large language models (LLMs) to external APIs. The image above, from OpenAI's official documentation, illustrates the process of calling a function.

As a first step, your application sends a prompt and defines the functions the LLM can use to respond to the prompt. A function definition contains an identifier and the parameters needed to call it.
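To make this concrete, here is a minimal sketch of what a function definition might look like. It assumes the JSON Schema based `tools` format accepted by OpenAI's Chat Completions API; the function name `get_order_status`, its description, and the `order_id` parameter are hypothetical and chosen only to match the order example above.

```python
import json

# Hypothetical function definition for an order-status lookup.
# The name, description, and parameter are illustrative assumptions.
get_order_status_tool = {
    "type": "function",
    "function": {
        # The identifier the LLM uses to refer to the function.
        "name": "get_order_status",
        # A description helps the LLM decide when the function is relevant.
        "description": "Look up the fulfillment status of a placed order.",
        # The parameters are described as a JSON Schema object.
        "parameters": {
            "type": "object",
            "properties": {
                "order_id": {
                    "type": "string",
                    "description": "Identifier of the order to look up.",
                },
            },
            "required": ["order_id"],
        },
    },
}

# The list of definitions is what you would send along with the prompt.
tools = [get_order_status_tool]
print(json.dumps(tools, indent=2))
```

Note that the definition only describes the function's interface. It contains no code, which is why the LLM never needs to see the implementation.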

You define the actual function in your codebase; the LLM does not need to know anything about its implementation. If the LLM decides that the function should be called, its response contains the function identifier and the arguments it has extracted from the conversation. Your code executes the function with those arguments and sends the results back to the LLM, which uses them to compose its reply to the user.
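The part of this round trip that runs in your own code can be sketched as follows. This is an illustration under stated assumptions, not OpenAI's API: the model's request is simulated as a plain dictionary, and `ORDERS`, `get_order_status`, `AVAILABLE_FUNCTIONS`, and `execute_function_call` are hypothetical names. The one detail taken from the real API is that the arguments arrive as a JSON-encoded string that your code has to parse.

```python
import json

# Hypothetical in-memory order store standing in for a real database or API.
ORDERS = {"A-1001": "shipped", "A-1002": "processing"}


def get_order_status(order_id: str) -> dict:
    """The actual implementation; the LLM never sees this code."""
    status = ORDERS.get(order_id)
    if status is None:
        return {"order_id": order_id, "error": "order not found"}
    return {"order_id": order_id, "status": status}


# Registry mapping function identifiers to their implementations.
AVAILABLE_FUNCTIONS = {"get_order_status": get_order_status}


def execute_function_call(name: str, arguments_json: str) -> str:
    """Run the requested function and return its result as a JSON string."""
    func = AVAILABLE_FUNCTIONS.get(name)
    if func is None:
        return json.dumps({"error": f"unknown function: {name}"})
    try:
        # The arguments are a JSON string and may be malformed or incomplete.
        arguments = json.loads(arguments_json)
        result = func(**arguments)
    except (json.JSONDecodeError, TypeError) as exc:
        return json.dumps({"error": str(exc)})
    return json.dumps(result)


# Simulated function call, shaped like what the model might request.
simulated_call = {
    "name": "get_order_status",
    "arguments": '{"order_id": "A-1001"}',
}

result_json = execute_function_call(
    simulated_call["name"], simulated_call["arguments"]
)
print(result_json)  # {"order_id": "A-1001", "status": "shipped"}
```

In a real application, `result_json` would be sent back to the LLM as the function result so it can phrase the answer for the user. Looking functions up in an explicit registry, rather than calling whatever name the model returns, keeps the set of executable actions under your control.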

Essentially, the LLM does not execute any actions itself. There is nothing magical about it: AI chatbots cannot fetch data directly from databases or call external APIs. Those actions are executed by your codebase, as they have always been. What the LLM adds is a natural-language interface: instead of forms and web pages, it collects the function parameters from the user through conversation and presents the results conversationally. This is very powerful and lets users interact with your systems in a completely new way.

Incorporating a functions toolset into an AI chatbot can turn it into a fully operational sales assistant that can recommend products to customers, handle shopping carts, receive payments, and provide information about order history—all while chatting about the weather, just like a human sales assistant would do in a physical store.